What is a crawler?

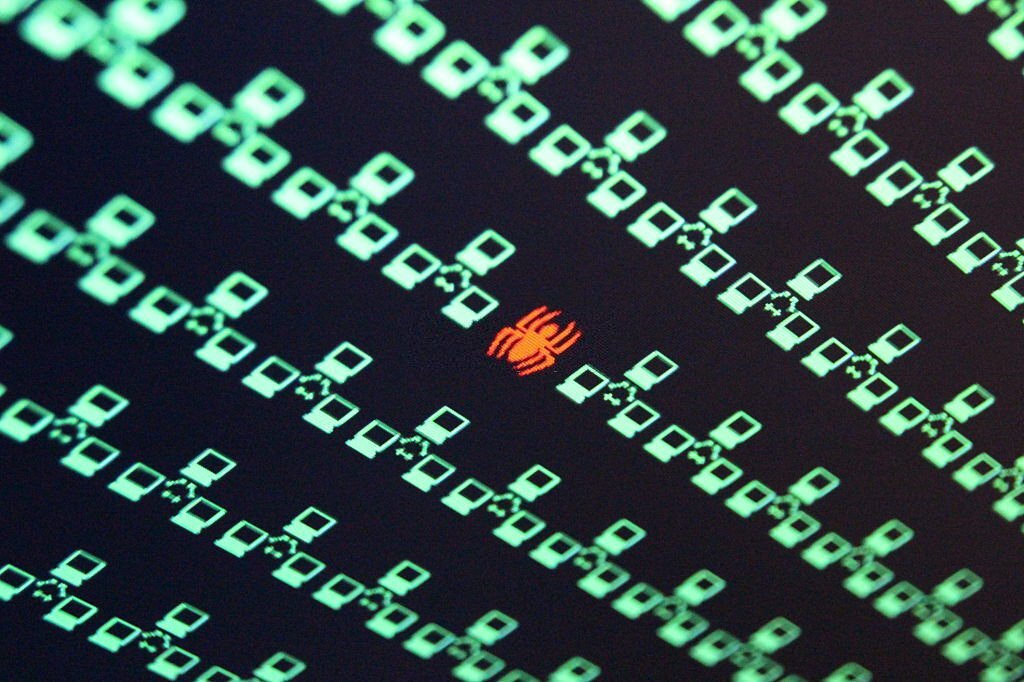

A crawler is a program that visits websites and gathers the information used to determine how pages rank in search results.

* It is also called a “robot” or a “spider” (because it moves around web pages that are linked together like a spider’s web).

Search engines such as Google, Yahoo!, and Bing each run their own crawlers, which patrol websites around the world. The search engine then analyzes the information the crawler collects and evaluates websites based on algorithms (programs that rank web pages).

Based on the results, the pages that best match the user’s search are displayed in order of rating.

The following is an easy-to-understand summary of the process from the creation of the web page until it is reflected in the search results.

- The crawler collects and stores information on web pages

- The search engine evaluates the web pages according to its algorithms

- The pages are displayed on the search screen in order of evaluation

No matter how good an article you create or rewrite with the best SEO measures, if the crawler does not recognize the web page, it will be neither evaluated by the search engine nor displayed in the search results.

That’s why it’s so important to let crawlers know about your website.

Crawler types

| Search engine | Crawler |
|---|---|
| Google | Googlebot |
| Yahoo! | Yahoo! Slurp *Yahoo! |
| Bing (Microsoft) | Bingbot |
| Baidu | Baiduspider |
| Apple | Applebot |

The above is a summary of popular search engines and their crawlers in Japan.

Each search engine has a crawler, which crawls websites around the world and collects information on web pages.
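
Crawlers usually announce themselves in the User-Agent header of each request, so a visit can be matched to a search engine. The following is a minimal sketch, assuming simple case-insensitive substring matching; the function name `identify_crawler` and the token table are illustrative, and because a User-Agent can be faked, this is a hint rather than proof of who is visiting.

```python
from typing import Optional

# Substrings that typically appear in each crawler's User-Agent header.
# The mapping is an illustrative assumption, not an exhaustive list.
CRAWLER_TOKENS = {
    "googlebot": "Googlebot",
    "slurp": "Yahoo! Slurp",
    "bingbot": "Bingbot",
    "baiduspider": "Baiduspider",
    "applebot": "Applebot",
}


def identify_crawler(user_agent: str) -> Optional[str]:
    """Return the crawler name if the User-Agent contains a known token."""
    lowered = user_agent.lower()
    for token, name in CRAWLER_TOKENS.items():
        if token in lowered:
            return name
    return None  # an ordinary browser or an unknown bot


if __name__ == "__main__":
    ua = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
    print(identify_crawler(ua))
```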

It should be noted that Google, the most used search engine in the world, is used by 76.19% of people in Japan. Yahoo! is the runner-up with 14.49%, followed by Microsoft’s search engine Bing with 8.76%.

More than three quarters of people use Google for searching, so it is very important to keep Google and Google users in mind when creating web pages.

To be evaluated by Google, the first step is to make sure that Googlebot (Google’s crawler) knows about your pages.

How search engines work

So, how does a search engine like Google recognize websites and present search results in response to the search user’s question?

Here, we will explain how the search engine works, based on Google’s own explanation of how Search organizes information.

First, Google’s crawler, Googlebot, acquires information on some web pages, follows the links on those pages to their destinations, and acquires information on those pages as well. This patrolling and information gathering is called crawling.

By repeatedly moving from link to link and collecting information, Google stores billions of web pages as one large body of information.

Pages stored in this way are said to be indexed by Google.
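
The crawl-and-store loop described above can be sketched in a few lines. This is a toy illustration, not how Googlebot is actually implemented: the “web” is a hypothetical in-memory dictionary (`PAGES`) instead of real HTTP requests, and the names `LinkExtractor` and `crawl` are made up for this example.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin

# Hypothetical in-memory "web": URL -> HTML. A real crawler would fetch over HTTP.
PAGES = {
    "https://example.com/": '<a href="/a">A</a> <a href="/b">B</a>',
    "https://example.com/a": '<a href="/b">B</a>',
    "https://example.com/b": '<a href="/">Home</a> <a href="/c">C</a>',
    "https://example.com/c": "No links here",
}


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(start_url, pages):
    """Follow links breadth-first from start_url and return the stored pages."""
    index = {}                      # URL -> stored HTML (our toy "index")
    queue = deque([start_url])
    while queue:
        url = queue.popleft()
        if url in index or url not in pages:
            continue                # already stored, or the page does not exist
        html = pages[url]
        index[url] = html           # "indexing": keep a copy of the page
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            queue.append(urljoin(url, href))  # resolve relative links
    return index


if __name__ == "__main__":
    for url in crawl("https://example.com/", PAGES):
        print(url)
```

Note that `/c` is only reachable through a link on `/b`. A page that no other page links to would never enter the queue, which is why links matter so much for being discovered.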

When a user enters a search query, the indexed pages that Google judges to be suitable are ranked and returned as results.

To determine the ranking, Google evaluates pages against more than 200 questions.

Below are some example questions.

- Is the keyword in the title or URL?

- Are keyword synonyms included?

- What is the quality of the website that has the web page to display?

- What is the page’s PageRank?

The results of these questions are combined and displayed to the user in descending order of the overall score.
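
To make the idea of “combining the answers into an overall score” concrete, here is a deliberately simplified sketch. The signals, the weights in `WEIGHTS`, and the `site_quality` field are all invented for illustration; Google’s actual signals and weights are not public and are far more numerous.

```python
from dataclasses import dataclass


@dataclass
class Page:
    url: str
    title: str
    body: str
    site_quality: float  # hypothetical quality signal between 0.0 and 1.0


# Invented weights, for illustration only.
WEIGHTS = {
    "keyword_in_title": 3.0,
    "keyword_in_url": 2.0,
    "synonym_in_body": 1.0,
    "site_quality": 4.0,
}


def score(page, keyword, synonyms):
    """Answer each 'question' and add up the weighted results."""
    kw = keyword.lower()
    total = 0.0
    if kw in page.title.lower():
        total += WEIGHTS["keyword_in_title"]
    if kw in page.url.lower():
        total += WEIGHTS["keyword_in_url"]
    if any(s.lower() in page.body.lower() for s in synonyms):
        total += WEIGHTS["synonym_in_body"]
    total += WEIGHTS["site_quality"] * page.site_quality
    return total


def rank(pages, keyword, synonyms):
    """Return pages in descending order of overall score."""
    return sorted(pages, key=lambda p: score(p, keyword, synonyms), reverse=True)


SAMPLE_PAGES = [
    Page("https://example.com/news", "Daily news", "Nothing relevant.", 0.9),
    Page("https://example.com/crawler-guide", "What is a crawler?", "A spider visits pages.", 0.5),
]

if __name__ == "__main__":
    for page in rank(SAMPLE_PAGES, "crawler", ["spider"]):
        print(page.url)
```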

Today, a crawler-based search engine programmed to perform this series of steps takes only about 0.5 seconds from receiving a search to displaying the results.

What to do to get the crawler to find you

In order to be displayed in the search results, a page must first be found and indexed by the crawler. How easily crawlers can discover your pages is called “crawlability”, and making them easier to discover is called improving crawlability.

Here are some important points to improve crawlability.

1. Create quality content

Of course, it is important that the content of the web page is of good quality, but for crawlability the internal structure of the website also matters.

Specifically, create your content with the following points in mind.

- Unify URLs

- Give the website a three-step structure

- Add the necessary internal links

- Configure breadcrumbs

- Remove dead links

“Unify URLs” refers to the fact that two URLs may exist for the same web page without you noticing.

(Example: some with www. and some without)

Having two URLs with the same content wastes the crawler’s limited resources, and duplicate content can lower your ranking on Google. If you have duplicate content, unify the URLs with a “301 redirect”.
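
As a rough sketch of the www / non-www case, the function below decides whether a requested URL should be answered with a 301 redirect to the unified URL. The host names are placeholders and `redirect_for` is a hypothetical helper; in practice the redirect is usually configured in the web server or CMS rather than written by hand.

```python
from urllib.parse import urlsplit, urlunsplit

CANONICAL_HOST = "www.example.com"  # placeholder: the one host you decide to keep
DUPLICATE_HOST = "example.com"      # placeholder: the variant to redirect away from


def redirect_for(url):
    """Return (301, unified_url) if url uses the duplicate host, else None."""
    parts = urlsplit(url)
    if parts.netloc.lower() != DUPLICATE_HOST:
        return None  # already unified, or not our site
    # Keep scheme, path, query and fragment; only swap the host.
    target = urlunsplit(parts._replace(netloc=CANONICAL_HOST))
    return 301, target


if __name__ == "__main__":
    print(redirect_for("https://example.com/page?id=1"))
    print(redirect_for("https://www.example.com/page?id=1"))
```

The status code 301 means “moved permanently”, which tells crawlers to treat the target as the one true URL from now on.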

Next, the “three-step structure of the website” means setting up the site so that users who land on the top page can reach the page they want within 2 clicks. Picture important keywords and content placed in a shallow hierarchy, spreading out to deeper levels like the branches of a tree.

As a result, the crawler will frequently visit the shallow hierarchy and check every corner of the web pages that branch off from it.
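
One way to check the “within 2 clicks” rule is to compute how many clicks each page is from the top page. The sketch below assumes you already have a map of which page links to which (`SITE_LINKS` is made-up sample data) and reports pages that sit deeper than the limit.

```python
from collections import deque

# Hypothetical site structure: page -> pages it links to.
SITE_LINKS = {
    "/": ["/services", "/blog"],
    "/services": ["/services/seo"],
    "/blog": ["/blog/crawler"],
    "/services/seo": ["/services/seo/case-study"],
    "/blog/crawler": [],
    "/services/seo/case-study": [],
}


def click_depths(links, top="/"):
    """Return the minimum number of clicks from the top page to each reachable page."""
    depths = {top: 0}
    queue = deque([top])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:          # first visit is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def too_deep(links, max_clicks=2, top="/"):
    """List pages that need more than max_clicks clicks from the top page."""
    return sorted(p for p, d in click_depths(links, top).items() if d > max_clicks)


if __name__ == "__main__":
    print(too_deep(SITE_LINKS))
```

In this sample, the case-study page is 3 clicks away, so it would be a candidate for an extra internal link from a shallower page.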

The important thing in the three-step structure is to set up “internal links” where necessary. With internal links in place, not only crawlers but also users can navigate your website and find the information they need, which helps your Google ranking.

Once the three-step structure is in shape, set up “breadcrumbs”. A breadcrumb is a list of links that shows the user where they currently are within the site. With breadcrumbs in place, the crawler can also recognize which layer each page belongs to, so crawling goes more smoothly.
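
As a small illustration, a breadcrumb trail can be derived from the URL path when the path mirrors the site hierarchy. This is a sketch under that assumption; `breadcrumbs` is a hypothetical helper, and real sites often take labels from page titles instead of URL segments.

```python
def breadcrumbs(path, home_label="Home"):
    """Turn '/blog/seo/page' into [(label, url), ...] from the top page down."""
    trail = [(home_label, "/")]
    current = ""
    for segment in path.strip("/").split("/"):
        if not segment:
            continue  # skip empty segments such as the root path
        current += "/" + segment
        label = segment.replace("-", " ").title()
        trail.append((label, current))
    return trail


if __name__ == "__main__":
    trail = breadcrumbs("/blog/seo/what-is-a-crawler")
    print(" > ".join(label for label, _ in trail))
```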

Finally, remove any “dead links”.

If an internal or external link on your website points to a page that no longer exists, both users and crawlers follow it in vain and cannot get an answer. This is inconvenient, and the website will be evaluated as unhelpful.

Make sure to check regularly whether the links you have placed are dead.
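
For internal links, a regular check can be as simple as comparing every link target against the list of pages that actually exist. The sketch below uses made-up sample data and a hypothetical `find_dead_links` helper; checking external links would additionally require requesting each URL and looking at the response status.

```python
def find_dead_links(links, existing_pages):
    """Return (source_page, target) pairs whose target is not an existing page."""
    dead = []
    for source, targets in links.items():
        for target in targets:
            if target not in existing_pages:
                dead.append((source, target))
    return dead


# Hypothetical sample data: page -> internal links found on that page.
LINKS = {
    "/": ["/blog", "/old-campaign"],
    "/blog": ["/", "/blog/crawler"],
    "/blog/crawler": ["/blog/deleted-post"],
}
EXISTING = {"/", "/blog", "/blog/crawler"}

if __name__ == "__main__":
    for source, target in find_dead_links(LINKS, EXISTING):
        print(f"{source} links to missing page {target}")
```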

By improving the internal structure in this way, the crawler will be able to patrol your site smoothly.

When the crawler can move through your website smoothly, it will crawl the site more frequently.

2. Create a sitemap

| | XML sitemap | HTML sitemap |
|---|---|---|
| Purpose | To be easily found by search engines | Improved convenience for search users |
| SEO effect | Effective | Little effect |
| Content | Information such as update frequency and web page priority, summarized in an XML file | A list of the contents of the website |
| Visible to users | No | Yes |

Another important aspect of crawlability is creating and setting up a sitemap on your website.

There are two types of sitemap: the XML sitemap and the HTML sitemap. The differences between the two are summarized in the table above, but the main difference is the purpose.

The XML sitemap is set up so that crawlers can easily find your pages, and it is an internal file that search users do not see.

On the other hand, the HTML sitemap is set up for search users; it is the “sitemap” page found on popular websites such as Rakuten Ichiba and docomo. Since the contents of the website are listed by category in the HTML sitemap, users can find and access the information they want from the list.

By creating these two sitemaps, movement within your website will become smoother, and crawlers will be able to patrol easily.
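
For reference, an XML sitemap is a plain XML file that lists each URL together with optional hints such as last modification date, update frequency, and priority. The following minimal sketch builds one with Python’s standard library; the URLs and values are placeholders, and `build_sitemap` is a hypothetical helper (many CMSs and plugins generate this file automatically).

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Build an XML sitemap string from a list of dicts with a 'loc' key."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for entry in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = entry["loc"]
        # Optional hints: only written when present in the entry.
        for field in ("lastmod", "changefreq", "priority"):
            if field in entry:
                ET.SubElement(url, field).text = str(entry[field])
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    print(build_sitemap([
        {"loc": "https://www.example.com/", "changefreq": "daily", "priority": 1.0},
        {"loc": "https://www.example.com/blog/crawler", "lastmod": "2024-01-15"},
    ]))
```

The resulting file is typically saved as `sitemap.xml` at the root of the site and then submitted to the search engine.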

How to check whether the crawler has visited

After taking measures to be discovered by the crawler, the next step is to check whether your pages were actually crawled.

Here, we will introduce how to check whether a specific web page has been discovered (whether it has been crawled).

1. site:

In the search engine’s search box, enter the URL of the web page you want to check.

Be sure to add [site:] before the URL.

If your web page does not appear when you press the search button, it means the page has not been crawled and indexed.
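
If you check many pages this way, it can help to generate the search URL instead of typing each query. The sketch below only builds the URL for a `site:` query on Google so that you can open it in a browser; it does not fetch or parse results, and `site_query_url` is a hypothetical helper.

```python
from urllib.parse import urlencode


def site_query_url(page_url):
    """Build a Google search URL for the query 'site:<page_url>'."""
    return "https://www.google.com/search?" + urlencode({"q": f"site:{page_url}"})


if __name__ == "__main__":
    print(site_query_url("https://example.com/blog/crawler"))
```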

2. Google Search Console

Log in to Google’s Search Console.

Then enter the URL of the web page you want to check in the URL inspection search bar and press the Enter key.

If the page has been crawled and indexed, the message [URL is on Google] will appear.

Conversely, if the message [URL is not on Google] is displayed, the page has not been indexed yet, so request indexing from that screen.

Effective SEO measures that can be understood from Google’s philosophy

Google’s philosophy is set out on the “Ten things we know to be true” page on Google’s official website.

Google works every day to stay true to this philosophy.

Therefore, when implementing SEO measures, it is important for us to always be aware of whether we are in line with Google’s philosophy.

The first of the 10 principles listed is “Focus on the user and all else will follow.”

Google cites “user convenience” as the most important point.

To improve the user experience, Google wants to show quality content that best suits the needs of users, so creating content that meets those needs is paramount.

However, even if you create and rewrite high-quality content, it is useless if the web page is not indexed because crawlers do not come around.

Create a website with an awareness of what kind of structure is good for the crawler to crawl smoothly, and what kind of content will improve user convenience.

Summary

In this article, I covered the crawler, one of the mechanisms behind search engines.

Google first recognizes your web page when the crawler visits it.

No matter how good an article you publish, if the crawler doesn’t find it, your web page won’t be indexed by Google or ranked and displayed in search results.

Make sure your web pages are crawled and indexed regularly.